The Kubernetes platform’s utility for automating the deployment, scaling and management of containerized applications is widely recognized. All of these capabilities, however, are accessed through one critical component: the API server, which runs within a cluster as kube-apiserver.

Because the API server is the central point for all communication within the cluster, it’s a good idea to thoroughly understand how the Kubernetes API functions. This applies whether you are a developer, operator or cluster administrator and regardless of whether you are a Kubernetes rookie or expert.

This article covers the architecture, role and importance of the Kubernetes API server and its core API and extensions API.

Why the Kubernetes API is Important

The Kubernetes API is important for developers and operations teams because it enables:

- Automation and Customization: DevOps teams can build automation scripts that interact with the Kubernetes API to deploy, manage and monitor applications and resources. This know-how allows teams to build tailored solutions that address specific requirements and workflows such as CI/CD, while enhancing the efficiency and capabilities of their Kubernetes deployments.

- Integration with External Systems: Using the Kubernetes API, developers can integrate Kubernetes with external systems such as CI/CD pipelines, monitoring tools, security solutions and network facilities including DNS and load balancers. The integration enables teams to streamline their processes, improve observability, leverage systems that are not cloud native and ensure consistency across their infrastructure.

- Debugging and Troubleshooting: Knowing how to interact with the Kubernetes API helps developers and operations teams quickly investigate and troubleshoot issues within their Kubernetes cluster. The API provides access to detailed information about the state of resources, events and logs, which are crucial for identifying and resolving problems.

- Deeper Understanding of Kubernetes: Thorough knowledge of the Kubernetes API allows developers and operations teams to gain a deeper understanding of the inner workings of Kubernetes and its underlying concepts. This knowledge is invaluable when designing, deploying and optimizing workloads on the Kubernetes platform.

- Extensibility: Kubernetes is designed to be extensible, allowing developers to create custom resources and extend the platform’s functionality. Understanding the Kubernetes API is essential for implementing and working with custom resource definitions (CRDs), which form the basis of extending Kubernetes to support domain-specific use cases or additional features.

Overall, knowing how to use the Kubernetes API empowers developers and operations teams to build, deploy and manage applications on Kubernetes effectively, driving cloud native benefits, increasing Kubernetes return on investment, and making teams more productive and valuable members of their organizations.

Kubernetes Architecture

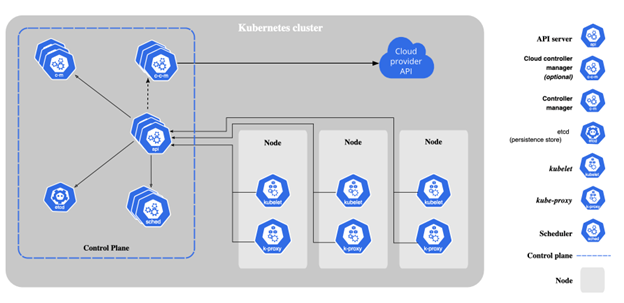

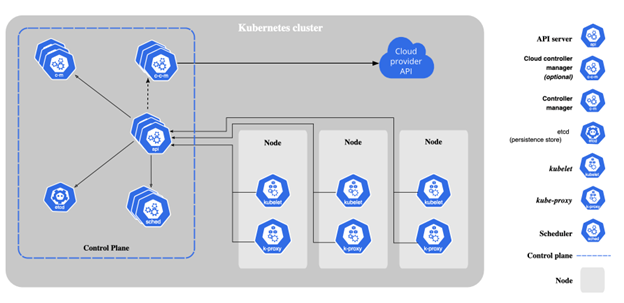

A typical Kubernetes cluster has two key components: the control plane and the worker nodes.

The control plane is the digital nervous system of a Kubernetes cluster. It decides which worker node or nodes will run the workload based on resource availability and workload characteristics through the scheduler component. The controller manager ensures that the desired state of the cluster resources is always maintained.
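The idea of maintaining a desired state can be illustrated with a toy reconciliation step. This is a minimal sketch, not the controller manager's actual code: the `ReplicaState` type, the `reconcile` function and the action names are hypothetical, chosen only to show how a controller compares what was requested with what is observed and derives the actions needed to close the gap.

```python
from dataclasses import dataclass


@dataclass
class ReplicaState:
    desired: int  # replicas requested by the user (hypothetical field)
    running: int  # replicas currently observed in the cluster (hypothetical field)


def reconcile(state: ReplicaState) -> list[str]:
    """Return the actions that move the observed state toward the desired state."""
    diff = state.desired - state.running
    if diff > 0:
        return ["create-pod"] * diff      # too few replicas: create the missing ones
    if diff < 0:
        return ["delete-pod"] * (-diff)   # too many replicas: remove the surplus
    return []                             # already converged, nothing to do
```

A real controller runs a step like this continuously, reading the current state from the API server and writing its changes back through the same API.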

The worker nodes are the workhorses of the cluster where containerized workloads are scheduled. They are responsible for managing the lifecycle of each container deployed as a part of the workload. The control plane, through the API server, manages the lifecycle of pods, while the kubelet running on each node manages the containers that are a part of each pod.

A pod is the smallest and most basic unit of deployment. It represents a single instance of a running process within the cluster. It can be considered a logical host containing one or more containers, storage resources and network configurations. A pod is assigned to a worker node in the cluster based on resource requirements and constraints, including CPU, memory and storage. This ensures efficient co-location of the containers on the node.

A highly available key-value database called “etcd” stores the cluster configuration and state, and acts as the single source of truth for the entire cluster. An optional component, the cloud controller manager, interacts with external infrastructure resources through the API available from the cloud provider. This component bridges the gap between the Kubernetes control plane and the underlying elastic infrastructure managed by the cloud provider. For example, the cloud controller manager provisions a cloud load balancer when a service of type LoadBalancer is created, and removes node objects when the underlying VMs are deleted.

Source: The Kubernetes Authors

At the center of the control plane is the API server, managing the internal and external operations. It exposes the API used by the internal components of the control plane and also the external tools. Thanks to the loosely coupled architecture, the Kubernetes API server can be distributed across multiple hosts to achieve horizontal scalability and balance the incoming traffic.

The Role of the Kubernetes API

As Kubernetes continues to evolve, the API supports newer functionality while maintaining backward compatibility with older tools and applications through a well-defined versioning system.

Kubernetes releases, including the API server, use a three-part version number: <major>.<minor>.<patch>. The API itself is versioned separately, with version names such as v1alpha1, v2beta3 or v1 that signal the level of stability. A version with just a number and no alpha or beta suffix, such as v1, indicates a stable version of the API with the strongest compatibility guarantees.

Understanding how the API server is versioned becomes crucial, since it determines how compatible the server is with client applications or tools that interact with the cluster. This approach of versioning the API ensures that client applications can select the correct version to communicate with the API server, and utilize its features and capabilities.
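As a rough illustration of how a client might reason about API version names such as v1alpha1, v2beta3 or v1, the sketch below parses a name into its parts and picks a preferred version from a list. The helper names (`parse_api_version`, `preferred`) are hypothetical, and the ordering used here (stable before beta before alpha, then higher numbers first) is a simplifying assumption for the example rather than a statement of what any particular tool does.

```python
import re
from typing import NamedTuple

# Matches names like "v1", "v1alpha1" or "v2beta3".
_VERSION_RE = re.compile(r"^v(\d+)(?:(alpha|beta)(\d+))?$")

# Higher rank means more stable.
_STABILITY_RANK = {"alpha": 0, "beta": 1, "stable": 2}


class ApiVersion(NamedTuple):
    major: int
    stability: str  # "alpha", "beta" or "stable"
    iteration: int  # the 3 in "v2beta3"; 0 for stable versions


def parse_api_version(name: str) -> ApiVersion:
    match = _VERSION_RE.match(name)
    if match is None:
        raise ValueError(f"not a Kubernetes-style API version: {name!r}")
    major, level, iteration = match.groups()
    if level is None:
        return ApiVersion(int(major), "stable", 0)
    return ApiVersion(int(major), level, int(iteration))


def preferred(names: list[str]) -> str:
    """Pick the most stable version, breaking ties by major number, then iteration."""
    def sort_key(name: str) -> tuple[int, int, int]:
        version = parse_api_version(name)
        return (_STABILITY_RANK[version.stability], version.major, version.iteration)

    return max(names, key=sort_key)
```

Given the three names used in this article, `preferred(["v1alpha1", "v2beta3", "v1"])` selects `v1`, since it is the only one without an alpha or beta suffix.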

Apart from the API server itself, none of the control plane components of a Kubernetes cluster has direct access to etcd, the persistence layer. To read or update the state, they must interact with the API. The design ensures a consistent mechanism for storing and maintaining the cluster’s desired state.

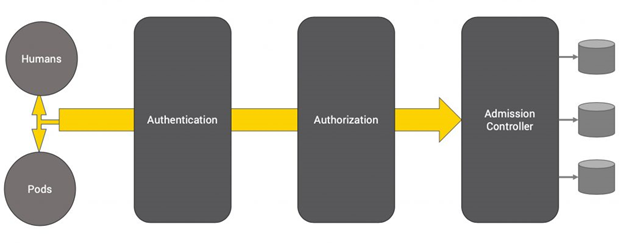

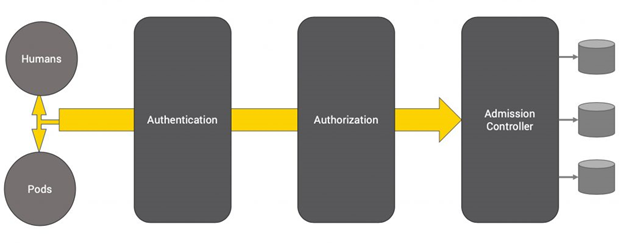

The API server can be broken down into three main layers:

- Authentication: This layer is responsible for verifying the identity of users accessing the API server. Kubernetes supports multiple authentication methods, including client certificates, bearer tokens and more.

- Authorization: Once a user’s identity is established, the authorization layer determines whether the user has the necessary permissions to perform the requested action. Kubernetes supports several authorization modes, of which role-based access control (RBAC) is the most widely used to define and manage permissions.

- Admission Control: The admission controller provides a pluggable architecture to accept or reject an API request. It supports a set of customizable plugins that intercept requests after authentication and authorization. These plugins can enforce custom policies, modify the requested objects or reject requests that do not comply with specific rules. Even if a request passes through the authentication and authorization layers, a policy defined by the admission controller can decline the request.

It’s important to understand that users and service accounts accessing the API go through the same authentication and authorization process.
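The three layers can be pictured as a pipeline in which each stage can stop a request before it reaches the next. The following is a toy model only, not how kube-apiserver is implemented: the token table, the permission rules, the "team label" admission policy and all function names are hypothetical, and real RBAC and admission plugins are far richer.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    namespace: str
    labels: dict = field(default_factory=dict)


# Hypothetical credentials: one human user and one service account.
TOKENS = {
    "token-alice": "alice",
    "token-ci": "system:serviceaccount:ci:deployer",
}

# Hypothetical permissions: subject -> allowed (verb, resource, namespace) tuples.
RULES = {
    "alice": {("get", "pods", "default"), ("create", "pods", "default")},
    "system:serviceaccount:ci:deployer": {("create", "pods", "default")},
}


def authenticate(req: Request) -> Optional[str]:
    """Layer 1: map the presented credential to an identity, if any."""
    return TOKENS.get(req.token)


def authorize(subject: str, req: Request) -> bool:
    """Layer 2: check whether the identity may perform the requested action."""
    return (req.verb, req.resource, req.namespace) in RULES.get(subject, set())


def admit(req: Request) -> bool:
    """Layer 3: apply a policy to write requests (here: objects need a 'team' label)."""
    if req.verb == "create":
        return "team" in req.labels
    return True  # reads are not subject to this policy


def handle(req: Request) -> str:
    subject = authenticate(req)
    if subject is None:
        return "401 Unauthorized"
    if not authorize(subject, req):
        return "403 Forbidden"
    if not admit(req):
        return "rejected by admission policy"
    return "accepted"
```

Note that the user and the service account in this sketch go through exactly the same `authenticate` and `authorize` calls, and that a request from an authorized subject can still be turned away at the admission stage.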

The modular design of the API enables RBAC to define fine-grained policies that allow or deny access to Kubernetes resources. To understand how this works, let’s take a look at the API groups supported by Kubernetes.

Understanding Core API and Extensions API

The Kubernetes API is divided into two main categories: the core API and the extensions API.

The core API includes the fundamental building blocks of Kubernetes, such as pods, services and nodes. It defines the primary resources required to manage the lifecycle of containerized applications within the cluster.

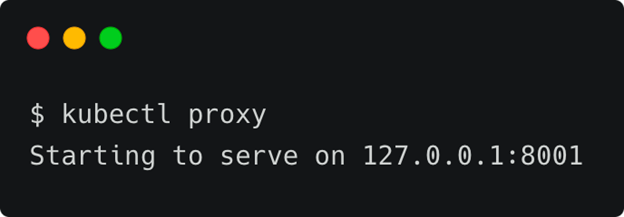

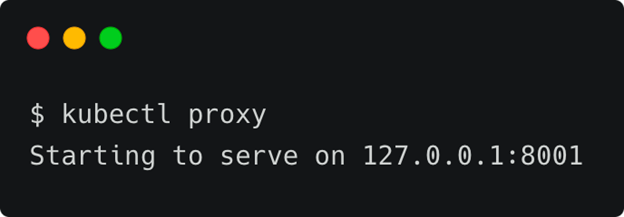

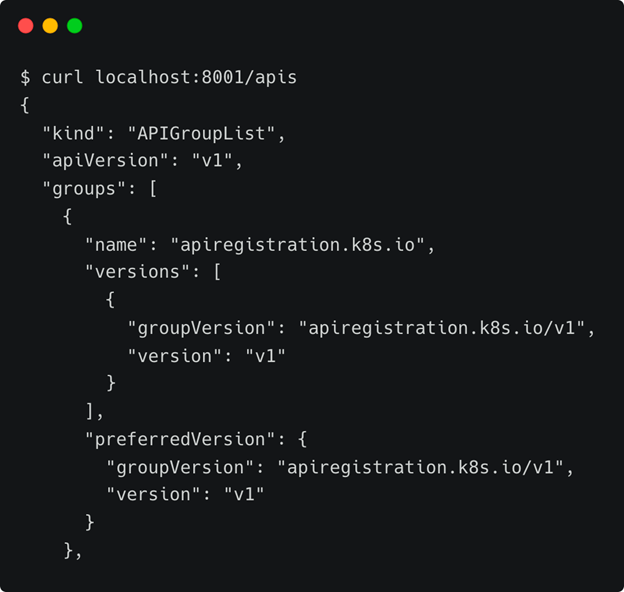

To query the existing APIs, first you need to open a tunnel through the proxy with kubectl pointed to a valid Kubernetes cluster.

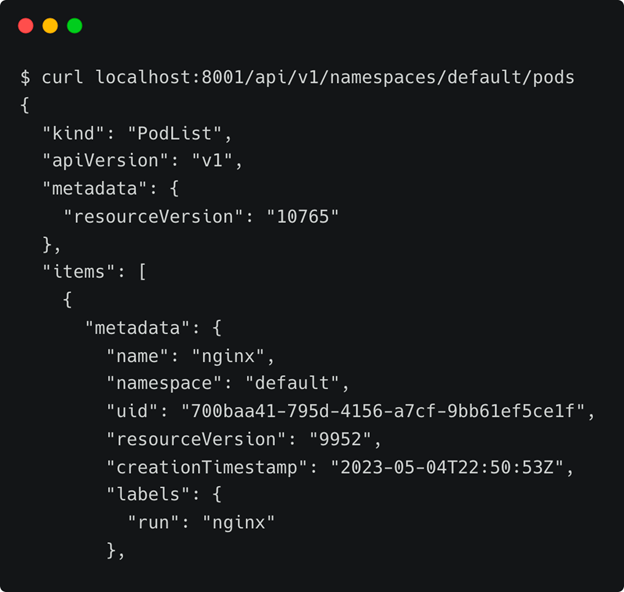

In a different terminal window, you can access the API server through the /api endpoint, which lists resources belonging to the core API group.

For example, to access an NGINX pod running in the default namespace, you can run the below command:
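Requests like these can also be sketched in Python using only the standard library. The block assumes that `kubectl proxy` is running locally on its default port, 8001, and that the pod is named `nginx`; both are assumptions for illustration, as are the helper names `group_path`, `pod_path` and `get_json`.

```python
import json
import urllib.request

# Assumption: `kubectl proxy` is listening locally on its default port.
PROXY = "http://127.0.0.1:8001"


def group_path(group: str, version: str) -> str:
    """Build the root path of an API group.

    The core group (empty name) is served under /api, while named
    groups such as "apps" are served under /apis/<group>.
    """
    if group == "":
        return f"/api/{version}"
    return f"/apis/{group}/{version}"


def pod_path(namespace: str, name: str) -> str:
    """Build the path of a single pod, which belongs to the core v1 API."""
    return f"{group_path('', 'v1')}/namespaces/{namespace}/pods/{name}"


def get_json(path: str, base: str = PROXY) -> dict:
    """Send a GET request through the proxy and decode the JSON response."""
    with urllib.request.urlopen(base + path) as response:
        return json.load(response)
```

With the proxy running, `get_json("/api")` would return the versions of the core API, and `get_json(pod_path("default", "nginx"))` would return the pod object, provided a pod with that name exists in the default namespace.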

The extensions API comprises additional resources and functionality that extend the capabilities of Kubernetes. These APIs are generally optional and can be enabled or disabled depending on the specific requirements of the cluster. Some examples of extension APIs include:

- Custom Resource Definitions (CRDs): CRDs allow users to define and manage custom resources within the cluster. This enables the management of tailored resources for specific use cases.

- API Aggregation: API aggregation enables users to register additional API servers with the Kubernetes control plane. This allows for the extension of the Kubernetes API with new APIs that are not part of the core API.

- Admission Controllers: As mentioned earlier, admission controllers are plugins that can enforce custom policies or modify resources before a request is persisted. Custom admission controllers can be written to allow or deny the creation of resources based on simple declarative rules or sophisticated policies maintained externally.
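To make the last item more concrete, here is a minimal sketch of the decision logic a custom validating admission webhook might contain. It takes an AdmissionReview request as a dictionary and returns an AdmissionReview response that echoes the request's `uid` and sets `allowed`. The policy itself (rejecting pods whose containers use an untagged or `latest` image) and the function names are hypothetical examples, and the HTTPS server and webhook registration that a real deployment needs are left out.

```python
def _has_pinned_tag(image: str) -> bool:
    """Return True if the image reference has a digest or a tag other than 'latest'."""
    if "@" in image:  # pinned by digest, e.g. nginx@sha256:...
        return True
    # Only look at the last path segment so a registry port is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    _, separator, tag = last_segment.partition(":")
    return bool(separator) and tag not in ("", "latest")


def review(admission_review: dict) -> dict:
    """Decide whether to admit the pod carried in an AdmissionReview request."""
    request = admission_review["request"]
    containers = request["object"].get("spec", {}).get("containers", [])
    offenders = [
        container["name"]
        for container in containers
        if not _has_pinned_tag(container.get("image", ""))
    ]

    response = {"uid": request["uid"], "allowed": not offenders}
    if offenders:
        response["status"] = {
            "message": "containers using an unpinned image: " + ", ".join(offenders)
        }
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }
```

Keeping the decision in a pure function like this makes the policy easy to test in isolation before it is wired into a webhook server.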

The Learning Path

Mastering the Kubernetes API takes hands-on practice. You do not want to learn on a live cluster in a production environment, so you can build the requisite muscle memory in a sandbox instead. The KubeCampus Kubernetes Learning Site provides one for free.

If you are new to Kubernetes and the Kubernetes API, we recommend that you start with Build Your First Kubernetes Cluster: Course 1. This lab is the prerequisite for the Build a Kubernetes Application: Course 2 and Course 3 Labs.

The centralized control that the Kubernetes API offers means that it’s critical to secure access through zero trust policies, the use of secrets and other security best practices. If you are completely new to Kubernetes security, Introduction to Kubernetes Security is a great place to start. Kubernetes Security — Intermediate Skills provides hands-on practice with secrets management, as well as other Kubernetes security topics including authentication, RBAC management, runtime security and pod-security standards.

Disaster recovery can also be managed through an extension of the Kubernetes API. The Kubernetes backup and recovery solution Kasten K10 exposes an API based on Kubernetes CRDs. Although backup and disaster recovery are not part of Kubernetes itself, they begin with control of the Kubernetes API.

The KubeCampus storage and disaster-recovery learning path begins with the Storage and Applications in Kubernetes and Backup Your Kubernetes Application labs. This lab is a prerequisite for Application Consistency, which offers more in-depth hands-on practice using Kasten K10 for Kubernetes-native application backup and recovery.